
Hide Matsuki

I am a Research Engineer at Google in the GenXR team, part of Android XR. Our team, led by Federico Tombari, conducts applied research on 3D GenAI and neural scene representations. I completed my PhD at the Dyson Robotics Lab, Imperial College London, supervised by Prof. Andrew Davison. During my doctoral studies, I was a research intern at Google Zurich, hosted by Federico Tombari and Keisuke Tateno. Before that, I completed my Master's degree at the University of Tokyo, during which I had the opportunity to work at the Technische Universität München (TUM) with Prof. Daniel Cremers. Between my master's and PhD degrees, I worked as a computer vision engineer at Artisense, a startup co-founded by Prof. Cremers.

Email / Scholar / Twitter / GitHub


Research

My PhD research focused on real-time computer vision and robotics, with a particular emphasis on visual SLAM. I am currently interested in sequential models for video input that enable scalable learning for embodied agents. Our team periodically hires interns and student researchers; please contact me if you are interested in working at Google's Munich or Zurich offices.

Publications


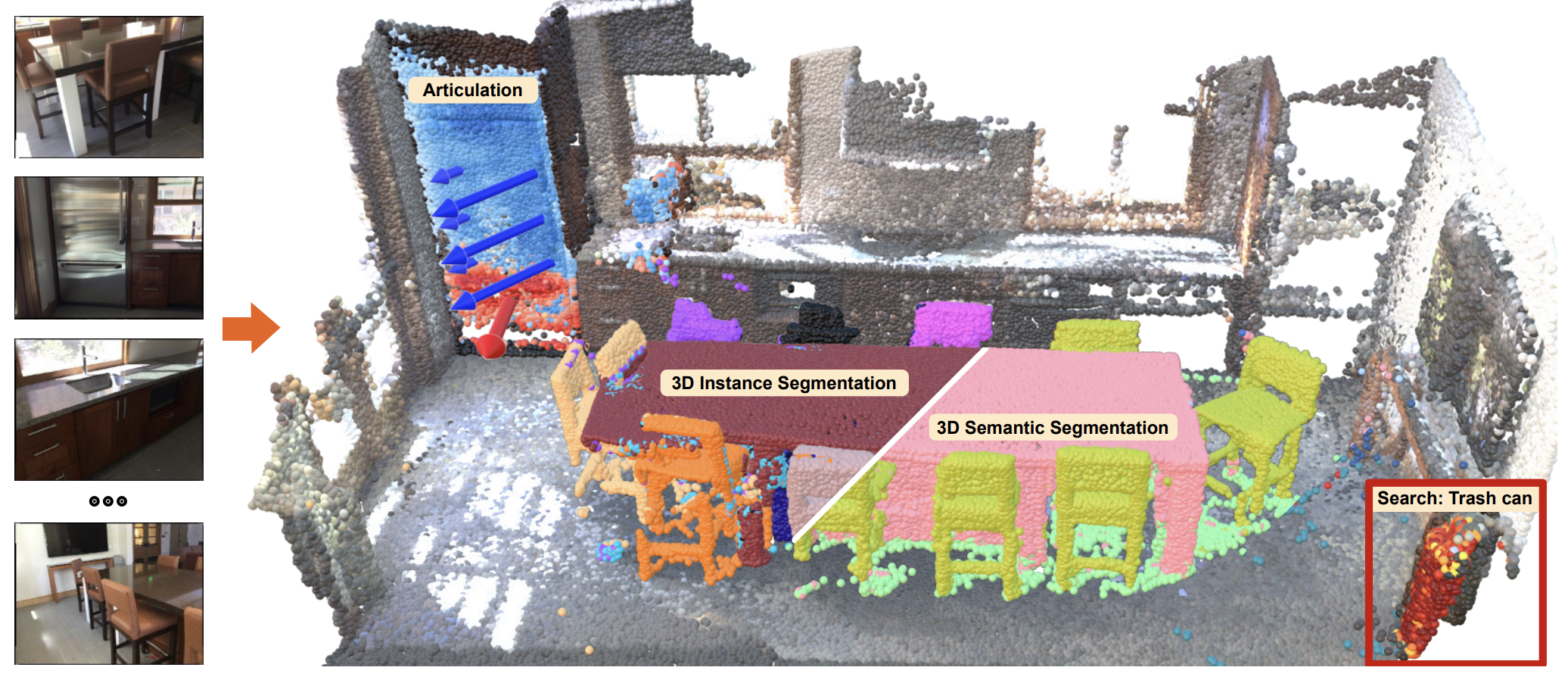

Unified Semantic Transformer for 3D Scene Understanding

Sebastian Koch, Johanna Wald, Hidenobu Matsuki, Pedro Hermosilla, Timo Ropinski, Federico Tombari
arXiv, 2025
project page / paper

A single feed-forward model that unifies multiple 3D semantic tasks from RGB input.


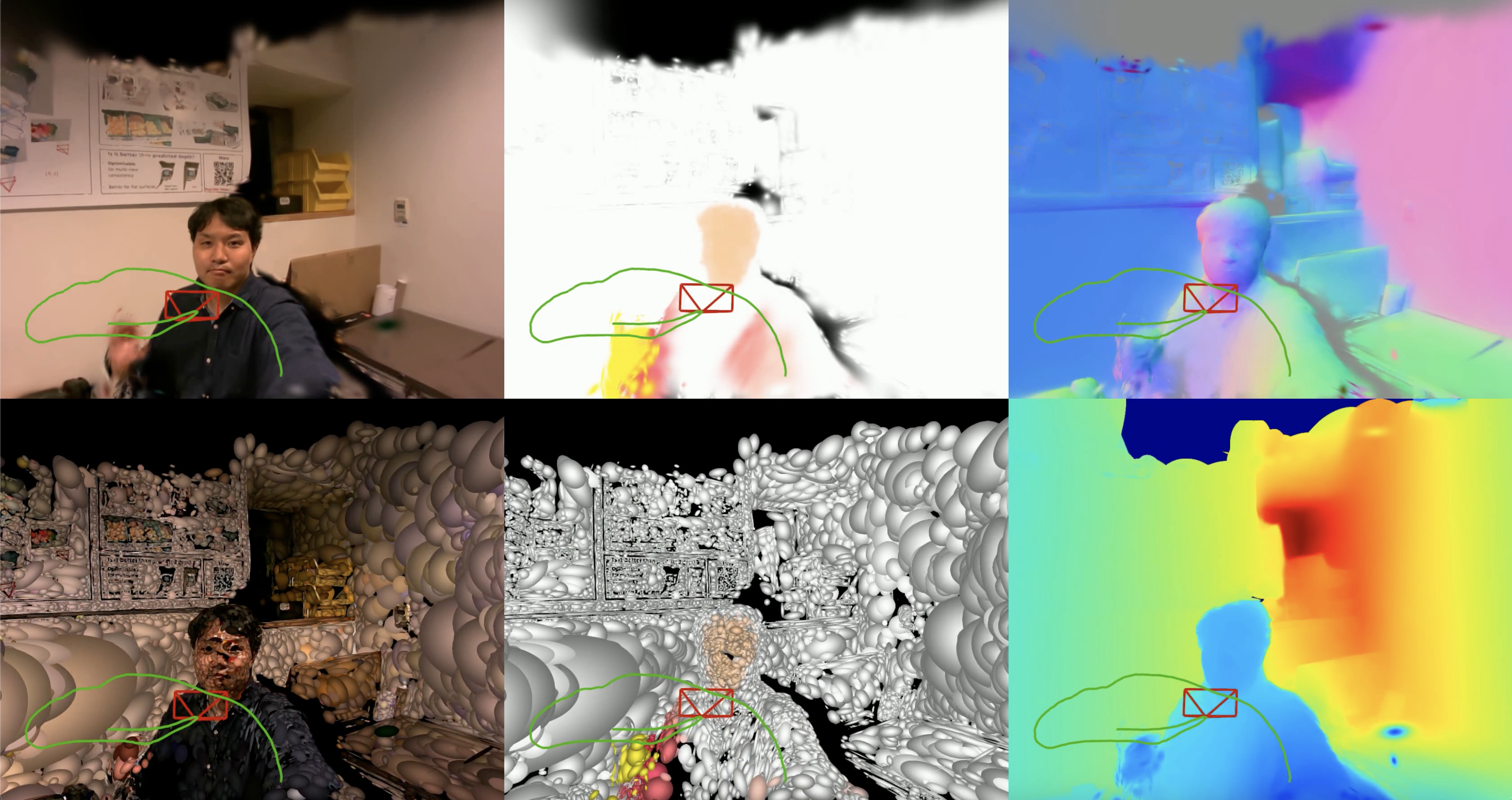

4DTAM: Non-Rigid Tracking and Mapping via Dynamic Surface Gaussians

Hidenobu Matsuki, Gwangbin Bae, Andrew J. Davison
IEEE / CVF Computer Vision and Pattern Recognition Conference (CVPR), 2025
project page / video / paper

A 4D scene reconstruction and camera ego-motion estimation framework based on dynamic surface Gaussians.


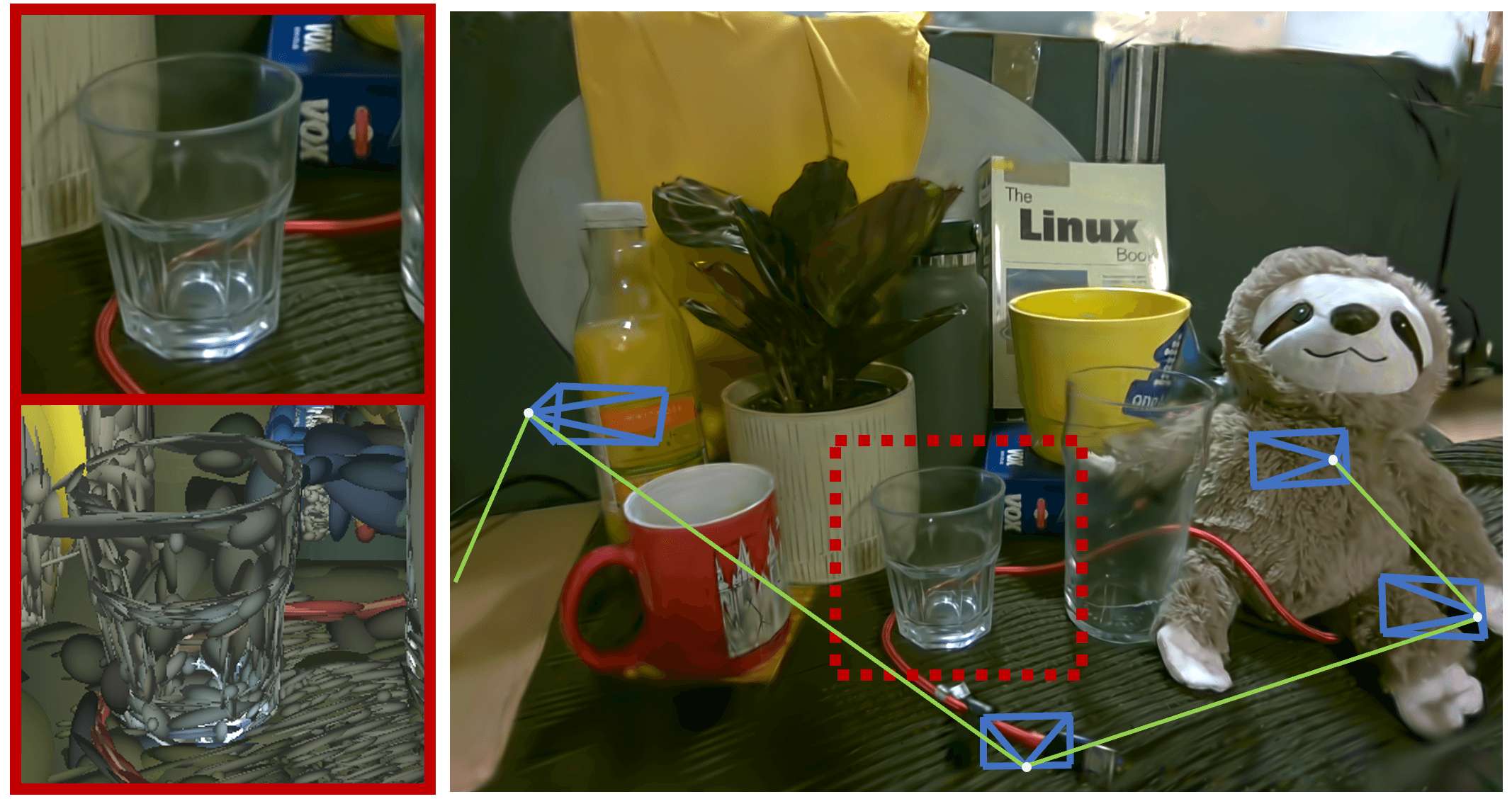

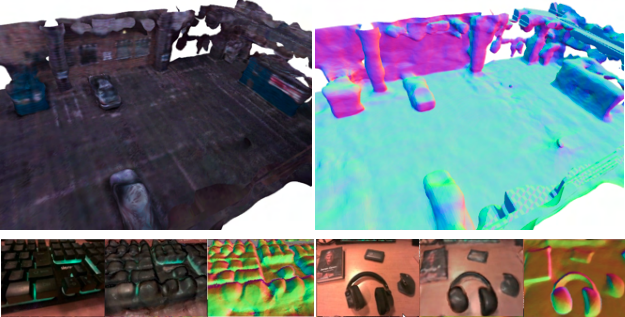

Gaussian Splatting SLAM

Hidenobu Matsuki*, Riku Murai*, Paul H.J. Kelly, Andrew J. Davison
IEEE / CVF Computer Vision and Pattern Recognition Conference (CVPR), 2024 (Highlight, Best Demo Award)
project page / video / paper

The first monocular SLAM system to use Gaussian Splatting as its sole scene representation. The method also supports RGB-D input.


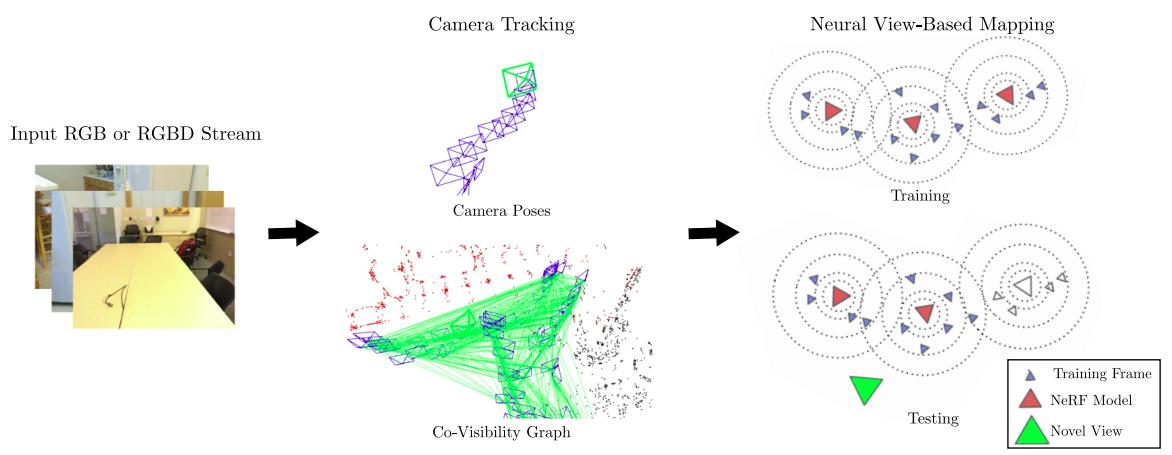

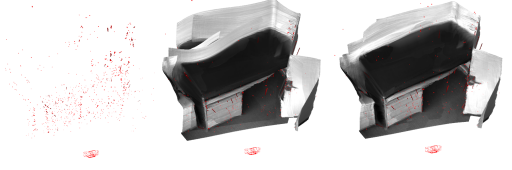

NEWTON: Neural View-Centric Mapping for On-the-Fly Large-Scale SLAM

Hidenobu Matsuki, Keisuke Tateno, Michael Niemeyer, Federico Tombari
IEEE Robotics and Automation Letters (RA-L), 2024
paper

Uses multiple local neural fields to handle loop closure efficiently in large-scale SLAM.


iMODE: Real-Time Incremental Monocular Dense Mapping Using Neural Field

Hidenobu Matsuki, Edgar Sucar, Tristan Laidlow, Kentaro Wada, Raluca Scona, Andrew J. Davison
IEEE International Conference on Robotics and Automation (ICRA), 2023 (Oral Presentation, Best Navigation Paper Award Finalist)
paper

A generic real-time monocular dense mapping pipeline that exploits the smoothness prior inherent in multilayer perceptrons (MLPs).


CodeMapping: Real-Time Dense Mapping for Sparse SLAM using Compact Scene Representations

Hidenobu Matsuki, Raluca Scona, Jan Czarnowski, Andrew J. Davison
IEEE Robotics and Automation Letters (RA-L), 2021
paper / video

A real-time dense mapping framework using compact scene representations from a generative model.


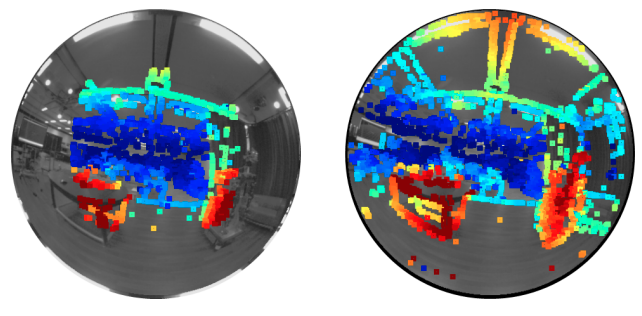

Omnidirectional DSO: Direct Sparse Odometry with Fisheye Cameras

Hidenobu Matsuki, Lukas von Stumberg, Vladyslav Usenko, Jörg Stückler, Daniel Cremers
IEEE Robotics and Automation Letters (RA-L), 2018
paper / video

Real-time direct visual odometry for omnidirectional cameras.

Books


SLAM Handbook: From Localization and Mapping to Spatial Intelligence
Luca Carlone, Ayoung Kim, Frank Dellaert, Timothy Barfoot, Daniel Cremers
Cambridge University Press, 2025
Book link

I co-authored Chapter 14, "Map Representations with Differentiable Volume Rendering," with Andrew Davison.


コンピュータビジョン最前線 (Computer Vision Frontiers) Summer 2025 (in Japanese)
Yoshihisa Ijiri, Yoshitaka Ushiku, Hirokatsu Kataoka, Hironobu Fujiyoshi, Shohei Nobuhara (eds.)
Kyoritsu Shuppan, 2025
Book link

I co-authored the chapter on 3D Gaussian Splatting with Keisuke Tateno.

Talks


Academic Service


Misc

I am a big fan of rugby, both playing and watching. I played in an international match in junior high school and later competed in the Rugby Bundesliga while living in Germany (a much smaller league than its soccer counterpart). I also enjoy beer and whisky, traveling, and watching anime.
